DOGE’s AI-Driven Federal Data Access: Innovation or Cybersecurity Nightmare?

Let’s chat about something that’s been popping up everywhere: DOGE’s use of AI to manage federal data. If DOGE is new to you, it’s the Department of Government Efficiency, the Trump administration initiative spearheaded by Elon Musk.

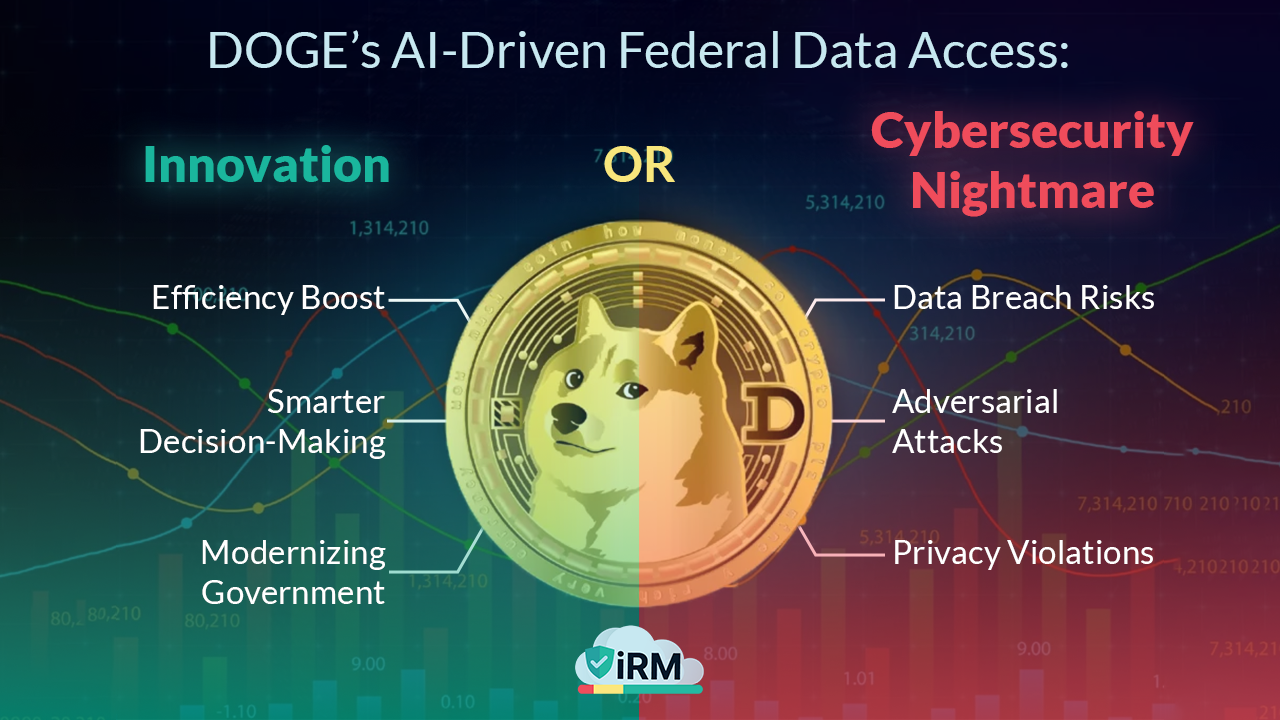

They’re reportedly using artificial intelligence to comb through sensitive federal information, such as Social Security numbers and bank details, with the goal of making the government run more efficiently. Pretty neat, huh? But wait: some people are sounding the alarm, worried this could open the door to serious cybersecurity trouble. So, is this a brilliant idea or a risky move? Let’s walk through it together, like we’re catching up over coffee.

What Is DOGE Doing with AI?

Let’s start with the basics: what’s DOGE all about? Their mission is simple: make the government more efficient and save some cash. They’re using AI to sift through data from federal agencies like the Treasury and the Department of Education. We’re talking personal stuff here: your address, income, or banking info.

The plan is to spot waste, trim costs, and speed things up. But here’s the twist: DOGE reportedly has broad access to this data, and that’s got folks jittery. It’s like giving someone your house key—you’d hope they’re careful with it, right?

Why AI Could Be a Game-Changer

So, why go with AI? It has some serious perks. Picture a tool that can crunch numbers, flag problems, and suggest fixes far faster than a human team could. The Cybersecurity and Infrastructure Security Agency (CISA), for example, has been putting AI to work on cyber defense. If AI can help spot hackers, it might also streamline things like managing taxpayer funds or processing loans. The big win here is efficiency: taking slow, outdated systems and giving them a high-tech kick.

The Cybersecurity Risks

Now, let’s flip the coin, because things could get dicey. Using AI with sensitive data carries real dangers. Attackers could slip fake records into the data the AI learns from, skewing what it treats as normal; that’s called data poisoning. Or they could feed it carefully crafted inputs designed to trick it into giving wrong answers, which is known as an adversarial attack.
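To make data poisoning a bit more concrete, here’s a toy sketch in Python. It assumes a very simple “model” that learns a cutoff for suspicious payments (the average plus three standard deviations) from past records. The payment amounts and the function name `fit_threshold` are made up for illustration and have nothing to do with any actual DOGE system. The point: a few planted fake records are enough to push the cutoff so high that a genuinely odd payment slips through.

```python
import statistics

def fit_threshold(amounts, k=3.0):
    """Learn a simple 'suspicious payment' cutoff: mean + k population std devs.
    (A toy stand-in for a real model, used only for illustration.)"""
    mean = statistics.mean(amounts)
    stdev = statistics.pstdev(amounts)
    return mean + k * stdev

# Hypothetical, made-up payment history (not real federal data).
clean_history = [100, 120, 95, 110, 105, 98, 102, 115, 108, 97]
suspicious_payment = 500

# Trained on clean data, the cutoff sits around 128, so 500 gets flagged.
clean_cutoff = fit_threshold(clean_history)
print(suspicious_payment > clean_cutoff)  # prints True

# An attacker slips three fake, very large records into the training data.
poisoned_history = clean_history + [5000, 5200, 4800]

# The learned cutoff balloons to roughly 7,400, so 500 no longer gets flagged.
poisoned_cutoff = fit_threshold(poisoned_history)
print(suspicious_payment > poisoned_cutoff)  # prints False
```

Real AI systems are far more complex, but the basic weakness is the same: a model is only as trustworthy as the data it learns from.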

Reports say DOGE relies on cloud platforms like Microsoft Azure, which are convenient but also prime targets for cyberattacks. Remember the 2015 Office of Personnel Management breach, which exposed sensitive records on more than 21 million people? That’s proof federal data can be vulnerable. It’s like leaving your bike unlocked downtown: convenient until it’s gone.

Privacy Worries: Are We Exposed?

Then there’s privacy, which hits close to home. Laws like the Privacy Act of 1974 are meant to shield our personal info, but critics question whether DOGE is playing by the rules. One lawsuit even claims DOGE overstepped by accessing data without proper safeguards.

Imagine someone rifling through your private stuff without asking; that’s the feeling here. If people start to believe their information isn’t safe, trust in the government could crumble, and that’s no small thing.

How Can We Keep This Safe?

So, how do we dodge disaster? There are ways to lock it down. Here’s a quick list of fixes:

- Encryption: Scramble the data so only the right people can see it.

- Anonymization: Strip out personal details so the AI sees trends, not names.

- Regular checks: Keep tabs on the system to spot problems early.
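Here’s a rough Python sketch of the anonymization idea. A few assumptions up front: the record layout, the field names, and the hard-coded key are all hypothetical. The sketch drops direct identifiers and swaps the SSN for a keyed hash (HMAC-SHA256), so analysts can still link records that belong to the same person without seeing who that person is. Strictly speaking, this is pseudonymization, a weaker cousin of full anonymization: whoever holds the key can re-link records, and the leftover fields could still help someone re-identify a person. It would need to sit alongside encryption and regular audits, not replace them.

```python
import hashlib
import hmac

# Hypothetical secret key. In practice it would live in a key-management
# system, never hard-coded or stored next to the data.
PSEUDONYM_KEY = b"replace-with-a-securely-stored-secret"

# Fields treated as direct identifiers in this sketch (an assumption, not an official list).
DIRECT_IDENTIFIERS = {"name", "ssn", "address", "bank_account"}

def pseudonymize(record: dict) -> dict:
    """Return a copy of `record` with direct identifiers removed and the SSN
    replaced by a keyed hash, so records can still be linked for analysis."""
    token = hmac.new(PSEUDONYM_KEY, record["ssn"].encode("utf-8"), hashlib.sha256).hexdigest()
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["person_token"] = token
    return cleaned

# Made-up example record.
record = {
    "name": "Jane Doe",
    "ssn": "123-45-6789",
    "address": "1 Example St",
    "bank_account": "000111222",
    "state": "OH",
    "annual_payment": 1840.00,
}

# Prints only state, annual_payment, and an opaque 64-character person_token.
print(pseudonymize(record))
```

Using a keyed hash rather than a plain SHA-256 of the SSN matters here: there are only about a billion possible SSNs, so an unkeyed hash could be reversed just by hashing every candidate.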

Tech alone won’t cut it, though; we need rules too. Strong policies, plus collaboration with security experts and academic researchers, could make a real difference. It’s like fixing a leaky roof: you need good tools and someone who knows how to use them.

Weighing the Good and the Bad

Let’s weigh it out. On one side, AI could save billions and speed up government services—big pluses. On the flip side, there’s the chance of breaches, legal trouble, and privacy woes.

Experts are divided: some see it as the future, cheering the possibilities; others wave warning flags, pointing to the pitfalls. It’s a tough one, like deciding whether to splurge on a fancy gadget—great features, but what if it breaks?

The Legal and Ethical Side

Legally and ethically, it’s a bit of a maze. Here’s what’s at play:

- Laws lagging behind: Rules like the Federal Information Security Management Act (FISMA) set security standards, but AI is moving faster than those rules can keep up.

- Fairness matters: How do we know AI’s decisions are fair if we can’t see inside the process?

- Our rights: Shouldn’t we have a say in how our data is used, including the option to say no?

It’s about keeping things open and fair—ensuring AI doesn’t turn into a mystery box we can’t crack.

Public and Private Team-Up

DOGE isn’t working solo; it reportedly partners with private companies, which adds another layer. These partnerships bring top-notch tech, which is fantastic. But there’s a snag: what if those companies profit from our data? That possibility has raised concerns about fairness and conflicts of interest. Done well, though, it’s a powerhouse combo. Think of it like teaming up with a buddy who’s a whiz at cooking: you want their skills, but you also want to split the meal evenly.

What’s the Bottom Line?

So, what’s the takeaway? DOGE’s AI push is a mixed bag. It could make the government sharper and leaner, which would be great. But the risks (cyber threats, privacy slips, legal gray areas) are real and serious. There’s no simple answer; it’s about finding a middle ground. We’ve got to keep pushing for answers and safety nets. What’s your take: can we make this work without a meltdown?

Now, picture this: you’re the captain of a spaceship, and DOGE’s AI is your shiny new engine. It could zoom you to the stars—or crash you into an asteroid. What’s your move?

Beam your thoughts over to iRM’s Contact Us page, and let’s spark a galactic chat about keeping this tech adventure safe and stellar. Your voice could steer us to the perfect orbit, so don’t hold back!
